
DIAMBRA Arena


Overview

DIAMBRA Arena is a software package featuring a collection of high-quality environments for Reinforcement Learning research and experimentation. It provides a standard interface to popular emulated arcade video games, offering a Python API fully compliant with the OpenAI Gym/Gymnasium format, which makes its adoption smooth and straightforward.

It supports all major operating systems (Linux, Windows and MacOS) and can be easily installed via Python pip, as described in the installation section below. It is completely free to use; users only need to register on the official website.

In addition, its GitHub repository provides a collection of examples covering the main use cases of interest, which can be run in just a few steps.

Main Features

All environments are episodic Reinforcement Learning tasks, with discrete actions (gamepad buttons) and observations composed of screen pixels plus additional numerical data (RAM values such as the characters' health bars or the side of the stage each character is on).

They all support both single-player (1P) and two-player (2P) modes, making them a perfect resource to explore all the following Reinforcement Learning subfields:

Available Games

Interfaced games have been selected among the most popular retro fighting games. While sharing the same fundamental mechanics, they provide different challenges, with specific features such as different types and numbers of characters, how combos are performed, health bar recharging, etc.

Whenever possible, games are released with all hidden/bonus characters unlocked.

Additional details can be found in their dedicated section.

Installation

1. Register on our website; it requires just a few clicks and is 100% free.

2. Install Docker Desktop (Linux | Windows | MacOS) and make sure you have permissions to run it (see here). On Linux, it’s usually enough to run the following command, then log out and log back in:

sudo usermod -aG docker $USER

3. Install the DIAMBRA Command Line Interface:

python3 -m pip install diambra

This command should find the proper binary for your OS architecture; if it does not, you can manually download and install it from the GitHub releases.

4. Install DIAMBRA Arena:

python3 -m pip install diambra-arena

Using a virtual environment to isolate your Python package installation is strongly recommended.
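As a quick sanity check after installation, the following minimal sketch (standard library only) reports whether the current interpreter runs inside a virtual environment and which versions of the diambra and diambra-arena distributions, named as in the pip commands above, are installed. The helper names in_virtualenv and installed_version are illustrative and are not part of DIAMBRA's API.

```python
import sys
from importlib import metadata
from typing import Optional


def in_virtualenv() -> bool:
    """Return True when running inside a venv (or virtualenv >= 20).

    Inside a virtual environment, sys.prefix points to the environment folder,
    while sys.base_prefix still points to the base Python installation.
    """
    return sys.prefix != sys.base_prefix


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a pip distribution, or None if missing."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    print("Inside a virtual environment:", in_virtualenv())
    # Distribution names as used in the pip commands above
    for dist in ("diambra", "diambra-arena"):
        version = installed_version(dist)
        print(f"{dist}: {version if version is not None else 'not installed'}")
```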

Quickstart

Download Game ROM(s) and Check Validity

Check available games with the following command:

diambra arena list-roms

Output example:

[...]

Title: Dead Or Alive ++ - GameId: doapp

Difficulty levels: Min 1 - Max 4

SHA256 sum: d95855c7d8596a90f0b8ca15725686567d767a9a3f93a8896b489a160e705c4e

Original ROM name: doapp.zip

Search keywords: ['DEAD OR ALIVE ++ [JAPAN]', 'dead-or-alive-japan', '80781', 'wowroms']

Characters list: ['Kasumi', 'Zack', 'Hayabusa', 'Bayman', 'Lei-Fang', 'Raidou', 'Gen-Fu', 'Tina', 'Bass', 'Jann-Lee', 'Ayane']

[...]

If you are using a Windows 10 “N” edition and get the error “ImportError: DLL load failed while importing cv2”, you might need to install the “Media Feature Pack”.

Search for the ROMs on the web using the search keywords provided by the list-roms command above. Carefully follow the game-specific notes reported there, and store all ROMs in the same folder, whose absolute path will be referred to below as /absolute/path/to/roms/folder/.

Specific game ROM files are required. Check the validity of the downloaded ROM(s) by running:

diambra arena check-roms /absolute/path/to/roms/folder/romFileName.zip

The output for a valid ROM file would look like the following:

Correct ROM file for Dead Or Alive ++, sha256 = d95855c7d8596a90f0b8ca15725686567d767a9a3f93a8896b489a160e705c4e
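The check above compares the file's SHA256 sum with the one listed by diambra arena list-roms. For illustration only, the same comparison can be reproduced with Python's hashlib. This minimal sketch does not replace diambra arena check-roms; the EXPECTED_SHA256 mapping is a hypothetical helper, filled here only with the doapp.zip entry from the list-roms output above.

```python
import hashlib
import sys
from pathlib import Path

# Hypothetical mapping, copied from the "SHA256 sum" field of `diambra arena list-roms`.
# Only the doapp entry shown above is included; extend it for other games.
EXPECTED_SHA256 = {
    "doapp.zip": "d95855c7d8596a90f0b8ca15725686567d767a9a3f93a8896b489a160e705c4e",
}


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Compute the SHA256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def check_rom(path: Path) -> bool:
    """Return True if the file's checksum matches the expected one for its name."""
    expected = EXPECTED_SHA256.get(path.name)
    if expected is None:
        return False  # ROM file name not in the mapping
    return sha256_of_file(path) == expected


if __name__ == "__main__":
    # Usage: python check_rom.py /absolute/path/to/roms/folder/doapp.zip
    for arg in sys.argv[1:]:
        rom = Path(arg)
        print(f"{rom.name}: {'OK' if check_rom(rom) else 'mismatch or unknown ROM'}")
```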

Make sure to check out our Terms of Use, and in particular Section 7. By using the software, you accept them in full.

Base script

A Python script to run a complete episode with a random agent requires just a few lines:

#!/usr/bin/env python3
import diambra.arena

def main():
    # Environment creation
    env = diambra.arena.make("doapp", render_mode="human")

    # Environment reset
    observation, info = env.reset(seed=42)

    # Agent-Environment interaction loop
    while True:
        # (Optional) Environment rendering
        env.render()

        # Action random sampling
        actions = env.action_space.sample()

        # Environment stepping
        observation, reward, terminated, truncated, info = env.step(actions)

        # Episode end (Done condition) check
        if terminated or truncated:
            observation, info = env.reset()
            break

    # Environment shutdown
    env.close()

    # Return success
    return 0

if __name__ == '__main__':
    main()

To execute the script, run:

diambra run -r /absolute/path/to/roms/folder/ python script.py

To avoid specifying the ROMs path at every run, you can define the environment variable DIAMBRAROMSPATH=/absolute/path/to/roms/folder/, either temporarily in your current shell/prompt session or permanently in your profile (e.g. ~/.bashrc on Linux).
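Because the environments follow the Gymnasium interface (reset returning (observation, info) and step returning a five-element tuple), the interaction loop of the base script can be factored into a reusable function that works with any such environment. The following is a minimal sketch, not part of the DIAMBRA Arena API: run_random_episode is a hypothetical helper, and its optional max_steps cap is an illustrative addition not present in the base script.

```python
from typing import Any, Optional, Tuple


def run_random_episode(env: Any, seed: Optional[int] = None,
                       max_steps: Optional[int] = None) -> Tuple[float, int]:
    """Run one episode with random actions on a Gymnasium-style environment.

    Returns the cumulative reward and the number of steps taken.
    """
    observation, info = env.reset(seed=seed)
    total_reward = 0.0
    steps = 0
    while max_steps is None or steps < max_steps:
        # Sample a random action, as in the base script
        action = env.action_space.sample()
        observation, reward, terminated, truncated, info = env.step(action)
        total_reward += float(reward)
        steps += 1
        # Episode end (done condition) check
        if terminated or truncated:
            break
    return total_reward, steps
```

For example, inside a script launched with diambra run as shown above, creating env = diambra.arena.make("doapp"), calling run_random_episode(env, seed=42) and then env.close() would reproduce the base script without rendering.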

Examples

We provide multiple examples covering the most important use cases, which can be used as templates and starting points to explore all the features of the software package.

They show how to leverage both single-player and two-player modes, how to set up environment wrappers with all their options, how to record human expert demonstrations, and how to load them to apply imitation learning.

Every example has a dedicated page in this documentation, and the source code is available in the code repository.

RL Libs Compatibility & State-of-the-Art Agents

DIAMBRA Arena is built to maximize compatibility with all major Reinforcement Learning libraries. It natively provides interfaces to the two most important packages, Stable Baselines 3 and Ray RLlib; an interface to Stable Baselines is also available but deprecated. Their usage is illustrated in detail in the dedicated section of this documentation and in the DIAMBRA Agents repository. It can easily be interfaced with any other package in a similar way.

Native interfaces, installed with the specific options listed below, are tested with the following versions:

- Stable Baselines 3 (2.1.*): pip install diambra-arena[stable-baselines3]

- Ray RLlib (2.7.*): pip install diambra-arena[ray-rllib]

- Stable Baselines (2.10.2): pip install diambra-arena[stable-baselines]
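The tested versions above can be compared against your environment with a minimal standard-library sketch. It assumes the PyPI distribution names stable-baselines3, ray (which ships RLlib) and stable-baselines, and uses shell-style wildcard matching so that a pattern such as 2.1.* matches any 2.1.x release. The TESTED_VERSIONS mapping is copied by hand from the list above and is not read from DIAMBRA Arena itself.

```python
from fnmatch import fnmatchcase
from importlib import metadata
from typing import Dict, Optional

# Tested versions listed in this documentation, keyed by (assumed) PyPI distribution name
TESTED_VERSIONS: Dict[str, str] = {
    "stable-baselines3": "2.1.*",
    "ray": "2.7.*",  # Ray RLlib is distributed as part of the "ray" package
    "stable-baselines": "2.10.2",
}


def matches_tested(installed: str, pattern: str) -> bool:
    """Return True if an installed version string matches a tested-version pattern."""
    return fnmatchcase(installed, pattern)


def report() -> Dict[str, Optional[bool]]:
    """Map each library to True/False (match/mismatch), or None if not installed."""
    results: Dict[str, Optional[bool]] = {}
    for dist, pattern in TESTED_VERSIONS.items():
        try:
            installed = metadata.version(dist)
        except metadata.PackageNotFoundError:
            results[dist] = None
            continue
        results[dist] = matches_tested(installed, pattern)
    return results


if __name__ == "__main__":
    labels = {None: "not installed", True: "tested version", False: "untested version"}
    for dist, status in report().items():
        print(f"{dist} (tested: {TESTED_VERSIONS[dist]}): {labels[status]}")
```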

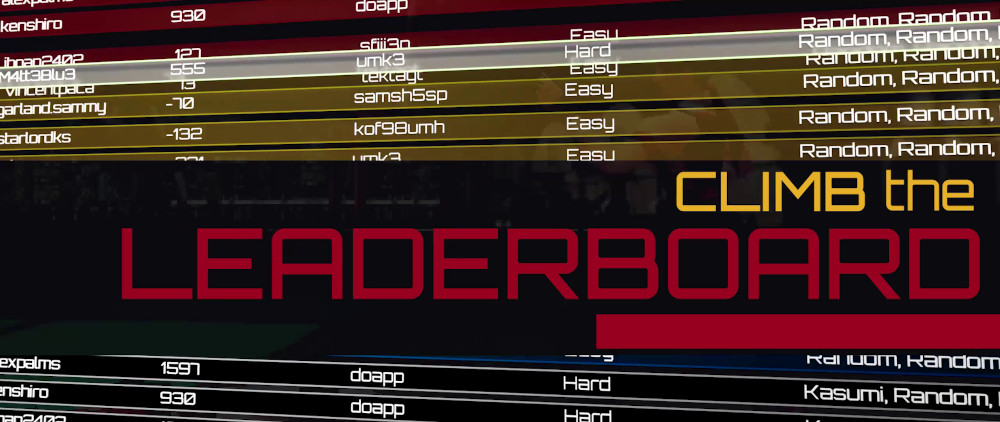

Competition Platform

Our competition platform allows you to submit your agents and compete with other coders around the globe in epic video game tournaments!

It features a public global leaderboard where users are ranked by the best score achieved by their agents in our different environments.

It also offers you the possibility to unlock cool achievements depending on your agent's performance.

Submitted agents are evaluated and their episodes are streamed on our Twitch channel.

We aimed to make the submission process as smooth as possible: try it now!

Docs Structure

Support, Feature Requests & Bug Reports

To receive support, use the dedicated channel in our Discord Server.

To request features or report bugs, use GitHub discussions and issue trackers for the repositories:

References

- Website: https://diambra.ai

- GitHub: https://github.com/diambra/

- Paper: https://arxiv.org/abs/2210.10595

- Linkedin: https://www.linkedin.com/company/diambra

- Discord: https://diambra.ai/discord

- Twitch: https://www.twitch.tv/diambra_ai

- YouTube: https://www.youtube.com/c/diambra_ai

- Twitter: https://twitter.com/diambra_ai

Citation

Paper: https://arxiv.org/abs/2210.10595

@article{Palmas22,
  author = {{Palmas}, Alessandro},
  title = "{DIAMBRA Arena: a New Reinforcement Learning Platform for Research and Experimentation}",
  journal = {arXiv e-prints},
  keywords = {reinforcement learning, transfer learning, multi-agent, games},
  year = 2022,
  month = oct,
  eid = {arXiv:2210.10595},
  pages = {arXiv:2210.10595},
  archivePrefix = {arXiv},
  eprint = {2210.10595},
  primaryClass = {cs.AI}
}

Terms of Use

The DIAMBRA Arena software package is subject to our Terms of Use. By using it, you accept them in full.