Hands-on Reinforcement Learning


What is the best path leading a passionate coder to the creation of a trained AI agent capable of effectively playing a video game? It consists of two steps: learning reinforcement learning and applying it.

The Learning Reinforcement Learning section below explains how to get started with RL. It presents resources covering everything from the basics up to the most advanced details of the latest, best-performing algorithms.

Then, the End-to-End Deep Reinforcement Learning section presents some of the most important tools, together with a step-by-step guide showing how to successfully train a Deep RL agent in our environments.

Learning Reinforcement Learning

Books

The first suggested step is to learn the basics of Reinforcement Learning. The best option for this is Sutton & Barto’s book “Reinforcement Learning: An Introduction”, which can be considered the reference text for the field. An additional option is Packt’s “The Reinforcement Learning Workshop”, which covers theory as well as a good amount of practice: it is very hands-on and is complemented by a GitHub repository with worked exercises.

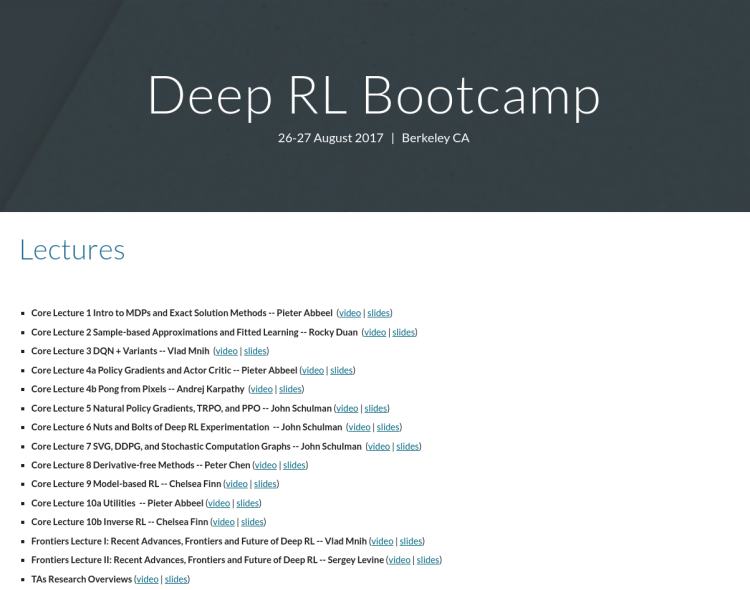

Courses / Video Lectures

Courses and video lectures are another useful resource, and the three listed in this paragraph are particularly valuable. The first, “DeepMind Reinforcement Learning Lectures at University College London”, is a collection of lectures covering RL in general, like Sutton & Barto’s book, and provides solid foundations for the field. The second, OpenAI’s “Spinning Up in Deep RL”, is a very useful website offering a step-by-step primer focused on Deep RL, guiding the reader from the basics to the most important algorithms, down to their implementation details. The third, the “Berkeley Deep RL Bootcamp”, provides videos and slides that also deal specifically with Deep RL, and presents a wide overview of the most important state-of-the-art methods in the field. All three are extremely useful and freely available.

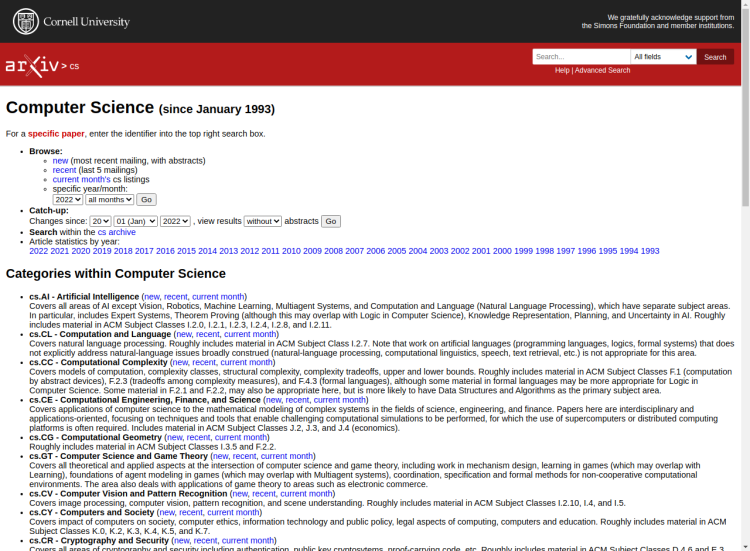

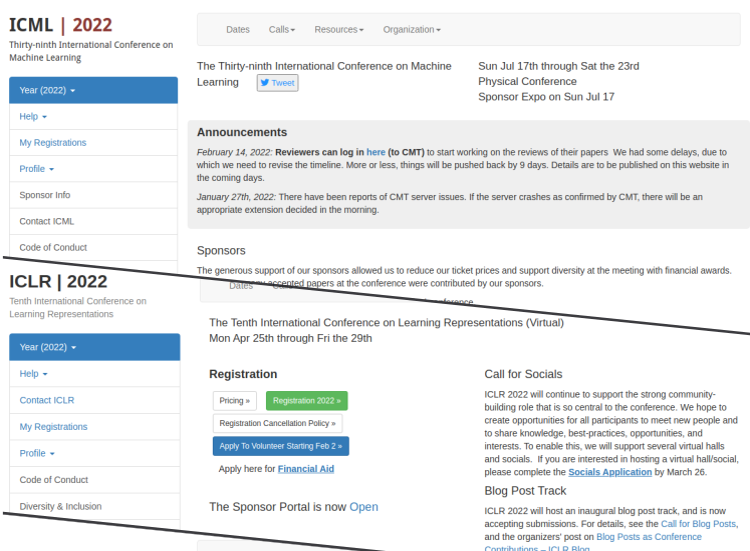

Research Publications

Once solid fundamentals have been acquired, as is usual across the whole ML domain, one should rely on publications to keep pace with advances in the field. Conference papers, peer-reviewed journals and open-access publications are all options to consider.

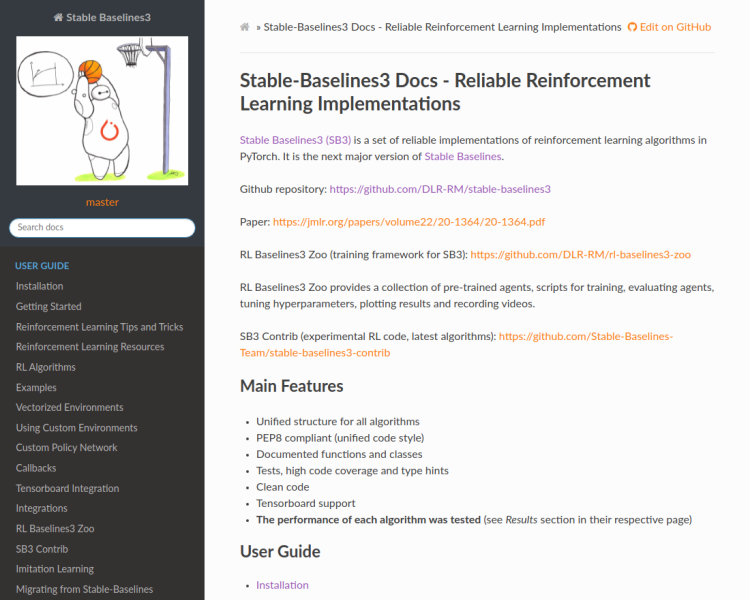

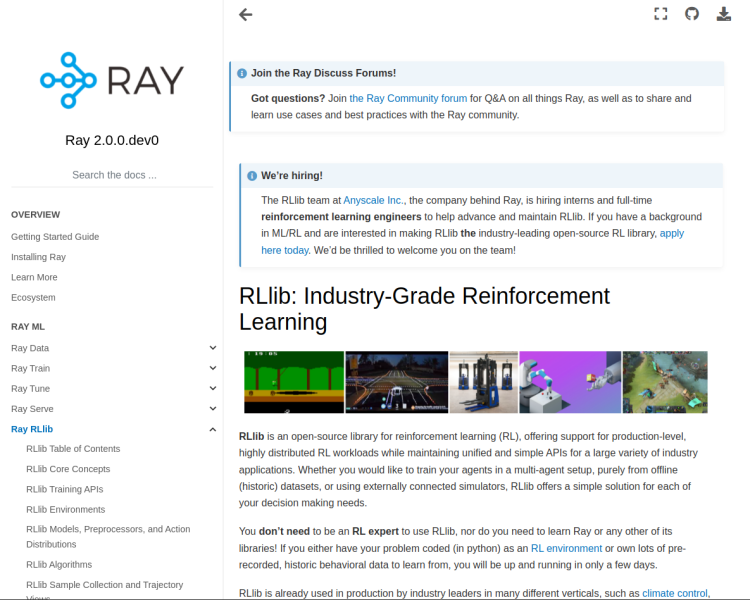

A good starting point is to read the reference paper of each state-of-the-art algorithm implemented in the most important RL libraries (see next section), as listed, for example, in the Stable Baselines 3 (SB3) and Ray RLlib documentation.

More

Finally, further sources of useful information, both to better understand this field and to get inspired by its great potential, are documentaries presenting notable milestones achieved by some of the best AI labs in the world. They showcase reinforcement learning masterpieces such as AlphaGo/AlphaZero, OpenAI Five and Gran Turismo Sophy, which mastered the games of Go, Dota 2 and Gran Turismo® 7 respectively.

End-to-End Deep Reinforcement Learning

Reinforcement Learning Libraries

If one wants to rely on already implemented RL algorithms, focusing their efforts on higher-level aspects such as policy network architecture, feature selection, hyperparameter tuning and so on, the best choice is to leverage state-of-the-art RL libraries such as the ones shown below. There are many options; here we list those that, in our experience, are recognized as leaders in the field and have been proven to achieve good performance in DIAMBRA Arena environments.

Using these libraries has multiple advantages. To name a few: they provide high-quality RL algorithms that are efficiently implemented and continuously tested; they natively support parallel environment execution; and in some cases they even support distributed training on multiple GPUs, either in a single workstation or in a cluster.
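To make the idea of parallel environment execution more concrete, the following is a minimal, single-process sketch of the vectorized-environment pattern these libraries build on: N copies of an environment are stepped in lockstep with a batch of actions, and finished episodes are reset automatically. `ToyEnv` and `LockstepVecEnv` are hypothetical classes written for illustration only, not part of DIAMBRA Arena or of any RL library; real libraries typically run each copy in a separate process to obtain actual parallelism.

```python
import random
from typing import List, Tuple


class ToyEnv:
    """Hypothetical stand-in environment: each episode lasts a random number of steps."""

    def __init__(self, seed: int) -> None:
        self.rng = random.Random(seed)
        self.t = 0
        self.horizon = 0

    def reset(self) -> int:
        self.t = 0
        self.horizon = self.rng.randint(3, 6)
        return self.t  # observation: the current step counter

    def step(self, action: int) -> Tuple[int, float, bool]:
        self.t += 1
        reward = 1.0 if action == 1 else 0.0
        done = self.t >= self.horizon
        return self.t, reward, done


class LockstepVecEnv:
    """Steps N independent environments together, auto-resetting finished ones."""

    def __init__(self, envs: List[ToyEnv]) -> None:
        self.envs = envs

    def reset(self) -> List[int]:
        return [env.reset() for env in self.envs]

    def step(self, actions: List[int]) -> Tuple[List[int], List[float], List[bool]]:
        obs, rewards, dones = [], [], []
        for env, action in zip(self.envs, actions):
            o, r, d = env.step(action)
            if d:
                o = env.reset()  # auto-reset so the batch never stalls
            obs.append(o)
            rewards.append(r)
            dones.append(d)
        return obs, rewards, dones


vec_env = LockstepVecEnv([ToyEnv(seed=i) for i in range(4)])
obs = vec_env.reset()
total_reward = 0.0
for _ in range(10):
    obs, rewards, dones = vec_env.step([1] * len(vec_env.envs))
    total_reward += sum(rewards)
print(total_reward)  # 4 envs x 10 steps x reward 1.0 = 40.0
```

Auto-resetting inside `step` is a common design choice: it lets the training loop keep collecting fixed-size batches of experience without special-casing episode ends.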

The next section provides guidance and examples for some of the options listed below.

Creating an Agent

All the examples presented in these sections, plus additional code showing how to interface DIAMBRA Arena with the major reinforcement learning libraries, can be found in our open-source repository DIAMBRA Agents.

Scripted Agents

The classical way to create an agent able to play a game is to hand-code the rules governing its behavior. These rules can range from very simple heuristics to very complex behavior trees, but they all require an expert coder who knows the game and is able to distill its key elements to craft the scripted bot.
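As an illustration of such hand-coded rules, here is a minimal sketch of a heuristic policy for a fighting game. It assumes a hypothetical observation dictionary with normalized positions (`own_x`, `opponent_x`) and health (`own_health`), and hypothetical action indices for a MultiDiscrete `[move, attack]` action space; the actual observation keys and action mappings depend on the game and on the environment settings.

```python
from typing import Dict, List

# Hypothetical action indices for a MultiDiscrete [move, attack] space;
# each real game defines its own mapping.
NO_MOVE, MOVE_LEFT, MOVE_RIGHT = 0, 1, 2
NO_ATTACK, PUNCH = 0, 1


def scripted_policy(obs: Dict[str, float]) -> List[int]:
    """Hand-coded rules mapping hypothetical observation features to an action."""
    distance = obs["opponent_x"] - obs["own_x"]
    if abs(distance) > 0.3:
        # Too far: walk toward the opponent without attacking.
        return [MOVE_RIGHT if distance > 0 else MOVE_LEFT, NO_ATTACK]
    if obs["own_health"] < 0.2:
        # Close but low on health: back away from the opponent.
        return [MOVE_LEFT if distance > 0 else MOVE_RIGHT, NO_ATTACK]
    # Close range with enough health: stand still and punch.
    return [NO_MOVE, PUNCH]


print(scripted_policy({"own_x": 0.1, "opponent_x": 0.9, "own_health": 1.0}))  # [2, 0]
print(scripted_policy({"own_x": 0.5, "opponent_x": 0.6, "own_health": 1.0}))  # [0, 1]
```

Every threshold and rule here encodes a designer's judgment; changing the tactic means editing the code.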

The following are two examples of (very simple) scripted agents interfaced with our environments. They are available in DIAMBRA Agents - Basic.

No-Action Agent

This agent simply performs the “No-Action” action at every step. By convention, this is the action with index 0: it must be a single value for Discrete action spaces, and one 0 per sub-action (e.g. `[0, 0]`) for MultiDiscrete ones, as shown in the snippet below.

```python
#!/usr/bin/env python3
import argparse
import random

import diambra.arena
from diambra.arena.utils.gym_utils import env_spaces_summary, available_games


def main(game_id="random"):
    game_dict = available_games(False)
    # Pick a random game if requested or if the given ID is not available
    if game_id == "random" or game_id not in game_dict:
        game_id = random.choice(list(game_dict.keys()))

    # Settings
    settings = {
        "player": "P2",
        "step_ratio": 6,
        "frame_shape": (128, 128, 1),
        "hardcore": False,
        "difficulty": 4,
        "characters": "Random",
        "char_outfits": 1,
        "action_space": "multi_discrete",
        "attack_but_combination": False,
    }

    env = diambra.arena.make(game_id, settings)
    env_spaces_summary(env)

    observation = env.reset()
    env.show_obs(observation)

    while True:
        # "No-Action" has index 0: a single value for Discrete spaces,
        # one 0 per sub-action (movement, attack) for MultiDiscrete ones
        action = 0 if settings["action_space"] == "discrete" else [0, 0]

        observation, reward, done, info = env.step(action)
        env.show_obs(observation)
        print("Reward: {}".format(reward))
        print("Done: {}".format(done))
        print("Info: {}".format(info))

        if done:
            observation = env.reset()
            env.show_obs(observation)
            if info["env_done"]:
                break

    # Close the environment
    env.close()

    # Return success
    return 0


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--gameId", type=str, default="random", help="Game ID")
    opt = parser.parse_args()
    print(opt)
    main(opt.gameId)
```
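The No-Action convention can also be wrapped in a small helper that does not depend on the rest of the script. This is a hedged sketch: the function name `no_action` is hypothetical, the space names mirror the `"discrete"` and `"multi_discrete"` values of the `action_space` setting used above, and the default of two sub-actions mirrors the `[0, 0]` used in the loop.

```python
from typing import List, Union


def no_action(action_space: str, n_dims: int = 2) -> Union[int, List[int]]:
    """Return the "No-Action" action, which by convention has index 0."""
    if action_space == "discrete":
        return 0  # a single index for Discrete spaces
    if action_space == "multi_discrete":
        return [0] * n_dims  # one zero per sub-action for MultiDiscrete spaces
    raise ValueError(f"Unsupported action space: {action_space!r}")


print(no_action("discrete"))        # 0
print(no_action("multi_discrete"))  # [0, 0]
```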

Random Agent

This agent simply performs a random action at every step. In this case, the action space’s `sample()` method takes care of generating an action that is consistent with the environment action space.

```python
#!/usr/bin/env python3
import argparse
import random

import diambra.arena
from diambra.arena.utils.gym_utils import env_spaces_summary, available_games


def main(game_id="random"):
    game_dict = available_games(False)
    # Pick a random game if requested or if the given ID is not available
    if game_id == "random" or game_id not in game_dict:
        game_id = random.choice(list(game_dict.keys()))

    # Settings
    settings = {
        "player": "P2",
        "step_ratio": 6,
        "frame_shape": (128, 128, 1),
        "hardcore": False,
        "difficulty": 4,
        "characters": "Random",
        "char_outfits": 1,
        "action_space": "multi_discrete",
        "attack_but_combination": False,
    }

    env = diambra.arena.make(game_id, settings)
    env_spaces_summary(env)

    observation = env.reset()
    env.show_obs(observation)

    while True:
        # Sample a random action that is valid for the environment action space
        actions = env.action_space.sample()

        observation, reward, done, info = env.step(actions)
        env.show_obs(observation)
        print("Reward: {}".format(reward))
        print("Done: {}".format(done))
        print("Info: {}".format(info))

        if done:
            observation = env.reset()
            env.show_obs(observation)
            if info["env_done"]:
                break

    # Close the environment
    env.close()

    # Return success
    return 0


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--gameId", type=str, default="random", help="Game ID")
    opt = parser.parse_args()
    print(opt)
    main(opt.gameId)
```
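Conceptually, `env.action_space.sample()` keeps the action valid by drawing each component within its own bounds. The following sketch reproduces that idea for a MultiDiscrete space described by a list `nvec` of option counts per sub-action (the same convention Gym’s `MultiDiscrete` space uses). It relies only on the standard library, the function name `sample_multi_discrete` is hypothetical, and the `[9, 4]` space below is an illustrative assumption, not the actual size of any DIAMBRA Arena action space.

```python
import random
from typing import List, Optional, Sequence


def sample_multi_discrete(nvec: Sequence[int], rng: Optional[random.Random] = None) -> List[int]:
    """Draw one valid MultiDiscrete action: component i is uniform over range(nvec[i])."""
    if rng is None:
        rng = random.Random()
    return [rng.randrange(n) for n in nvec]


# Hypothetical action space: 9 movement options and 4 attack options
nvec = [9, 4]
rng = random.Random(0)  # seeded for reproducibility
samples = [sample_multi_discrete(nvec, rng) for _ in range(1000)]
print(samples[:3])
```

Because every component is drawn within its own bound, no sampled action can fall outside the space, which is why the random agent never needs to validate its actions.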

More complex scripts can be built in similar ways, for example by continuously performing user-defined combo moves, or by adding more complex choice mechanics. However, this still requires deciding the tactics in advance and properly translating knowledge into code. A different approach is to leverage reinforcement learning, so that the agent improves by learning from its own experience.
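A combo-based script of this kind can be sketched as an agent that replays a fixed sequence of actions, one per step, regardless of what it observes. The combo and its action indices below are hypothetical placeholders for a MultiDiscrete `[move, attack]` space; the real movement and attack indices depend on the game. The sketch also makes the limitation explicit: the tactic is fixed in advance and never adapts.

```python
from itertools import cycle
from typing import Iterator, List

# Hypothetical combo as a sequence of MultiDiscrete actions [move, attack].
# The indices are illustrative only; each game defines its own action mapping.
COMBO: List[List[int]] = [
    [6, 0],  # hypothetical "down"
    [7, 0],  # hypothetical "down-forward"
    [8, 1],  # hypothetical "forward" + punch
]


class ComboAgent:
    """Scripted agent that replays a fixed combo forever, one action per step."""

    def __init__(self, combo: List[List[int]]) -> None:
        self._actions: Iterator[List[int]] = cycle(combo)

    def act(self, observation=None) -> List[int]:
        # The observation is ignored: the tactic was decided in advance.
        return next(self._actions)


agent = ComboAgent(COMBO)
print([agent.act() for _ in range(4)])  # [[6, 0], [7, 0], [8, 1], [6, 0]]
```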

Deep RL Trained Agents

An alternative to scripted agents is to adopt reinforcement learning. The following sections provide examples of how to do so with the most important libraries in the field.
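The key difference from a scripted agent is that behavior is learned from experience rather than written by hand. As a minimal illustration, not tied to DIAMBRA Arena or to any library, the sketch below applies tabular Q-learning with epsilon-greedy exploration to a hypothetical six-cell corridor in which only reaching the rightmost cell is rewarded. The agent is never told to move right, yet its greedy policy converges to doing so. Deep RL libraries replace the table with a neural network and add many refinements, so this is only a conceptual sketch.

```python
import random

# Hypothetical toy task: a corridor of N_STATES cells; the agent starts at cell 0
# and receives reward 1 only when it reaches the rightmost (terminal) cell.
N_STATES = 6
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    next_state = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore sometimes, otherwise exploit current estimates
            # (ties are broken randomly so the untrained agent still moves around).
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Q-learning update: move Q(s, a) toward r + gamma * max_a' Q(s', a').
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q = q_learning()
greedy_policy = [0 if q[s][0] > q[s][1] else 1 for s in range(N_STATES - 1)]
print(greedy_policy)  # learned "always move right": [1, 1, 1, 1, 1]
```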

DIAMBRA Arena natively provides interfaces to both Stable Baselines 3 and Ray RLlib, making it easy to train models with them in our environments. Each library-dedicated page presents some basic and advanced examples.

DIAMBRA Arena also provides a working interface to Stable Baselines 2, but it is deprecated and will be discontinued in the near future.